Chances are that the average PC user has never heard of supercomputers. That's no big deal, because these machines aren't meant for gaming or social networking but for complex, computation-heavy tasks. These technological monsters are made up of many processors and operated by scientists, so they are housed in some of the most respected laboratory facilities. Take a look:

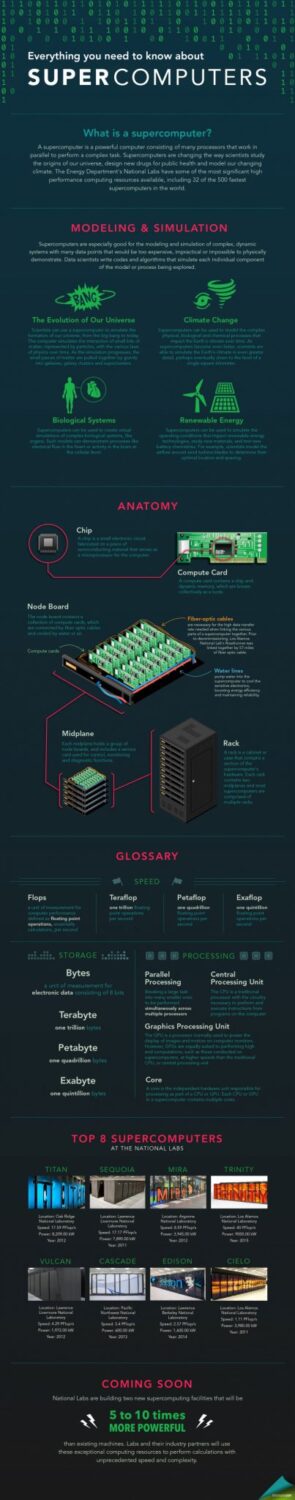

One of the things they are particularly good at is creating simulations and models of abstract environments and situations. Systems and processes that are hard to understand, invisible, or known only through theory can be represented in a supercomputer's models, starting at the very beginning with a simulation of the Big Bang. A supercomputer can apply multiple laws of physics to the interactions of particles of matter and eventually depict the formation of galaxies. Just as it can reconstruct past events, a supercomputer can project future ones, such as climate change, giving scientists detailed knowledge of what lies ahead and what our planet is facing in the near future.

With the help of supercomputers, we have come to understand the human body better. Organ systems can be simulated too, scaled down to the level of individual cell activity. Supercomputers are also used in renewable energy research, where they help make significant breakthroughs possible and contribute to better living conditions for the next generations.

But, hardware-wise, what does a supercomputer consist of? The smallest unit is the chip, an electronic circuit that serves as a microprocessor. The chip sits on a compute card along with dynamic memory, and together they make a node. The next level up is the node board, which holds multiple compute cards. Node boards are organized into midplanes, and every two midplanes make a rack. In simple terms, a supercomputer is a lot of racks.

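A computer's performance is measured in flops (floating-point operations per second) and its larger multiples: teraflops, petaflops, and exaflops. These express the number of calculations the machine can perform each second: a teraflop is a trillion (10^12), a petaflop is a quadrillion (10^15), and an exaflop is a quintillion (10^18). Storage, however, is expressed in bytes, the familiar unit of measurement for electronic data.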
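To get a feel for these units, here is a minimal Python sketch that converts a prefixed speed into plain flops and estimates how long a job would take at that speed. The function names and the workload of 10^18 operations are assumptions chosen for illustration; the 17.59 Pflop/s figure is the Titan speed quoted further down in this article. Real programs rarely sustain a machine's headline speed, so treat the result as a lower bound on running time.

```python
# Decimal multipliers for the flops units mentioned in the text.
PREFIXES = {"": 1, "tera": 10**12, "peta": 10**15, "exa": 10**18}


def to_flops(value, prefix):
    """Convert a speed such as 17.59 'peta' flops into plain flops."""
    return value * PREFIXES[prefix]


def seconds_for(operations, value, prefix):
    """Ideal time to finish `operations` calculations at the given speed."""
    return operations / to_flops(value, prefix)


if __name__ == "__main__":
    # Hypothetical workload: 10**18 floating-point operations.
    workload = 10**18
    print(f"At 17.59 Pflop/s: {seconds_for(workload, 17.59, 'peta'):.1f} s")
    print(f"At 1 Tflop/s:     {seconds_for(workload, 1, 'tera'):.0f} s")
```

The same workload that a petaflop-class machine could in principle finish in about a minute would keep a 1 Tflop/s machine busy for more than eleven days.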

A supercomputer does a great deal of parallel processing. It divides a large task into many smaller ones, which are then handled by multiple processors simultaneously. Of course, a supercomputer also relies on central processing units (CPUs), through which program instructions pass, and often on graphics processing units (GPUs), which were designed to render imagery and motion much faster than a traditional processor can.
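The divide-and-combine idea can be sketched in a few lines of Python. This is only an illustration of the pattern, not how a real supercomputer is programmed: the task (a sum of squares), the function names, and the worker count are all assumptions, and Python threads show the structure of the approach rather than a true speed-up for this kind of arithmetic.

```python
from concurrent.futures import ThreadPoolExecutor


def split(items, parts):
    """Divide a list into `parts` nearly equal chunks."""
    size, extra = divmod(len(items), parts)
    chunks, start = [], 0
    for i in range(parts):
        # The first `extra` chunks take one additional item each.
        end = start + size + (1 if i < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def sum_of_squares(chunk):
    """The small task each worker performs on its own chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(numbers, workers=4):
    """Split the big task, hand the pieces to workers, combine the results."""
    chunks = split(numbers, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(1_000_000)), workers=8))
```

Each worker only ever sees its own chunk, and the partial results are combined at the end. On a supercomputer the same three steps (split, compute, combine) are spread across thousands of nodes instead of a handful of threads.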

The National Labs have 32 of the 500 most powerful supercomputers in the world. Among them are Titan (Oak Ridge, 2012, 17.59 Pflop/s), Sequoia (Lawrence Livermore, 2011, 17.17 Pflop/s), and Mira (Argonne, 2012, 8.59 Pflop/s), with the newest being Trinity (Los Alamos, 2015, 40 Pflop/s). They are not stopping there: two more supercomputer facilities are being built as you read this! These upcoming machines are expected to be 5 to 10 times more powerful than the existing ones, able to handle complex calculations on a scale never seen before.